The rapid advancement of Artificial Intelligence (AI) offers transformative capabilities, but it also presents significant security challenges. As AI models grow in value, safeguarding them from unauthorized access, tampering, and intellectual property theft becomes paramount. Furthermore, running AI inference at the edge, where security controls are often weaker, adds another layer of exposure.

In this blog post, we’ll outline a practical use case that we used to test and validate a solution architecture designed to address these challenges, built on ARCA Trusted OS.

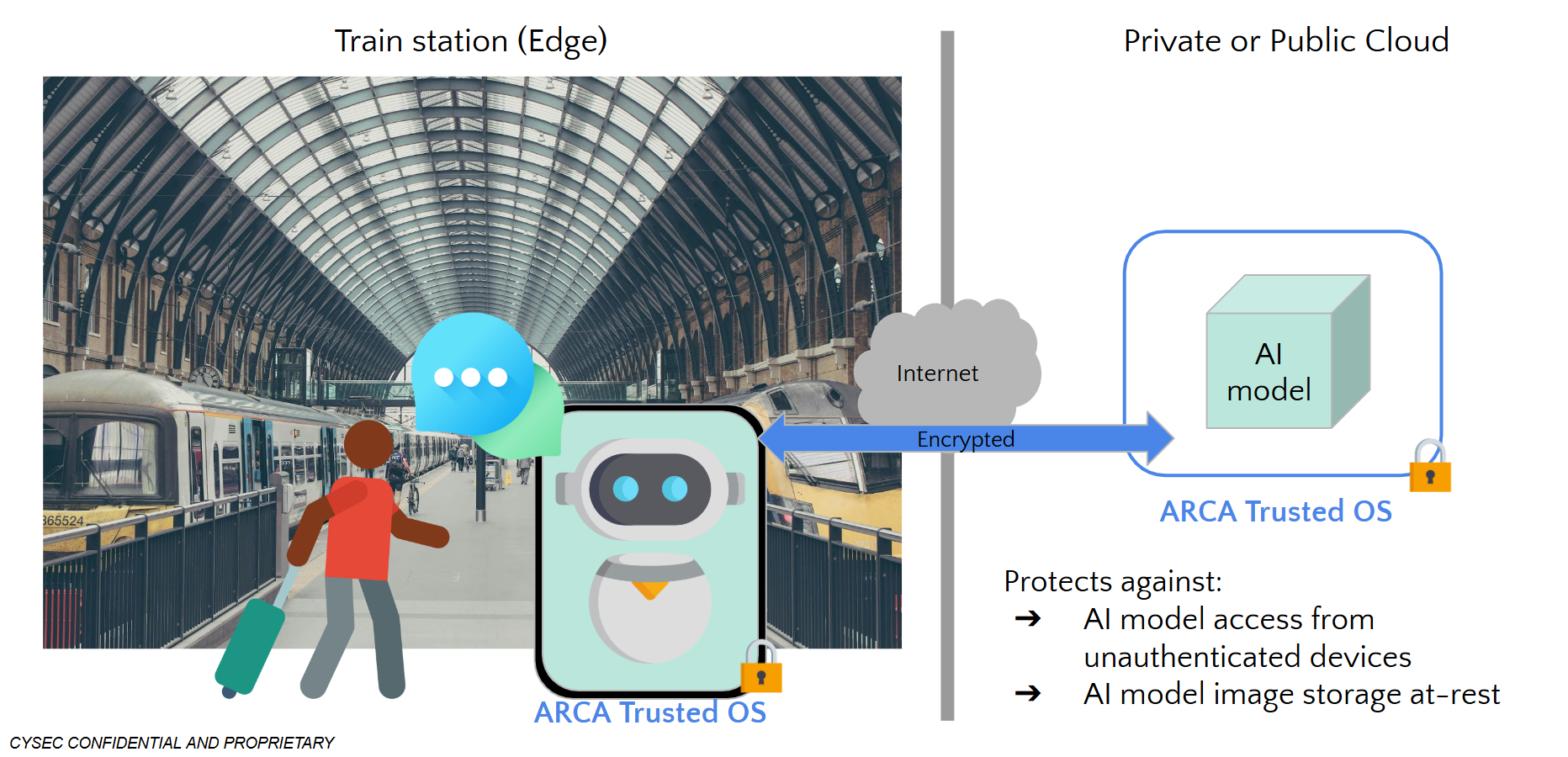

Use-Case Scenario: Securing AI-Based Support Services in Train Stations

Railway companies are increasingly motivated to implement AI to create more efficient, safe, and pleasant travel experiences, while reducing operational costs. A common approach involves hosting the core AI model within their central IT infrastructure, while deploying user interfaces on edge devices at each train station. However, these public spaces present unique security risks. The limited physical control over edge devices exposes them to potential theft and tampering, which could compromise the confidentiality and integrity of the entire AI-based service.

The Challenge: Protecting AI in a Vulnerable Edge Environment

Imagine a railway company that has developed a cutting-edge Large Language Model (LLM) to power its AI-based support services. They want to offer inference services to passengers at the edge, but must address critical security concerns:

- Model Confidentiality: Protecting their valuable LLM intellectual property from unauthorized access.

- Data Integrity: Ensuring that inferences are based on unaltered, trusted models.

- Secure Execution: Safeguarding pre-shared secrets and inference processes in potentially hostile edge environments.
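
To make the integrity and pre-shared-secret concerns more concrete, here is a minimal Python sketch. It is purely illustrative and is not ARCA Trusted OS code: the function names, the JSON request format, and the choice of SHA-256 with HMAC are our own assumptions. It shows how a device could check that a model artifact matches a trusted digest, and how an inference request could be authenticated with a pre-shared secret. It does not address model confidentiality, which would need encryption and protected key storage on top.

```python
import hashlib
import hmac
import json


def model_digest(model_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of a model artifact."""
    return hashlib.sha256(model_bytes).hexdigest()


def verify_model(model_bytes: bytes, expected_digest: str) -> bool:
    """Check the artifact against a digest obtained from a trusted source."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(model_digest(model_bytes), expected_digest)


def sign_request(secret: bytes, payload: dict) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    # Hypothetical wire format: sorted keys and compact separators so that
    # both sides serialize the same payload to the same bytes.
    body = json.dumps(payload, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return hmac.new(secret, body, hashlib.sha256).hexdigest()


def verify_request(secret: bytes, payload: dict, tag: str) -> bool:
    """Recompute the tag on the receiving side and compare in constant time."""
    return hmac.compare_digest(sign_request(secret, payload), tag)
```

In a setup like the one described here, the edge device would call `sign_request` before sending a passenger query and the central service would call `verify_request` on receipt. The sketch assumes the secret itself is stored somewhere an attacker with physical access cannot read it, which is exactly the secure-execution concern listed above.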

Our Solution: ARCA Trusted OS for Enhanced AI Security at the Edge

To address these challenges, we’ve developed a solution architecture centered on ARCA Trusted OS, our specialized operating system designed for heightened security in edge deployments. We validated this architecture through a Proof of Concept (PoC) involving an open-source LLM. The LLM was securely hosted in the cloud, while inference requests were issued from edge devices running ARCA Trusted OS.